
KELLY M. MCGINN AND JULIE L. BOOTH

Temple University

DOI: 10.13042/Bordon.2018.62138

Received: 06/12/2017 • Accepted: 29/05/2018

Corresponding author: Kelly M. McGinn. E-mail: kelly.mcginn@temple.edu

INTRODUCTION. When explaining their reasoning, students should communicate their mathematical thinking precisely; however, it is unclear whether formal terminology is necessary or whether students can explain or describe mathematical concepts using everyday language. This paper reports the results of two studies. The first explores the relation between students’ use of formal and informal mathematical language and procedural knowledge in mathematics; the second replicates and extends these findings using a longitudinal design over the course of an entire school year. METHOD. Study 1 uses a pre-test, intervention, post-test method across one unit of study. Study 2 uses the same pre-test, intervention, post-test method; however, it implements a longitudinal design across an entire school year using growth curve analysis. RESULTS. Findings show that students benefit most when they attempt to describe the targeted mathematical concepts, regardless of the type of language used. This finding is consistent with prior work showing that having students go through the process of self-explaining, independent of the quality of those explanations, yields benefits to learning (e.g., Chi, 2000). DISCUSSION. Although teachers should still use formal language in their classrooms, they should not be discouraged if students are initially unable to use formal language correctly. We suggest that teachers allow students to explain their reasoning using either formal or informal terms, especially while students are in the midst of developing an understanding of the mathematical concepts.

Keywords: Mathematical instruction, Communication, Language, Algebra.

Many educational organizations stress the precise use of mathematics language (e.g., the United States’ Common Core State Standards, 2010; the National Council of Teachers of Mathematics, 2010; the United Kingdom Department for Education and Employment’s National Numeracy Strategy, 1999; Canada’s Ontario Ministry of Education, 2005). However, the meaning of precise language has been interpreted in a variety of ways. One of the most widely accepted interpretations is that in order for students to communicate precisely, they must use formal mathematical terminology. For instance, the National Numeracy Strategy (NNS) suggests that students “explain their methods and reasoning using correct mathematical terms” (DfEE, 1999: 4). Barwell (2005) makes the argument that the NNS “views technical terms as being used to convey precise mathematical meaning” (p. 121). While many feel it is important to scaffold students from the use of everyday language towards the use of technical mathematical language (e.g., Leung, 2005), others argue that a combination of both formal and everyday language may be more beneficial (e.g., Moschkovich, 2002). In fact, Barwell (2013) found that professional mathematicians effectively integrate both formal and everyday language into mathematical discourse; therefore, it may be more beneficial to improve not only our students’ precision of formal language, but also that of everyday language used in the mathematics classroom.

This paper will describe two studies. The first explores the relation between students’ use of formal and informal mathematics language and mathematics performance; the second replicates and extends these findings using a longitudinal design over the course of an entire school year.

While it is well accepted that students should communicate precisely, there is disagreement as to whether teachers should stress the use of formal mathematical language or allow students to explain the concepts using their own everyday language. We refer to formal language as “the standard language used to talk about mathematics, which encodes the meaning of mathematics and which is sometimes referred to as the mathematics register” (Barwell, 2016: 333). For instance, this includes using terms such as coefficient, slope, and linear equation when describing algebra concepts. We refer to informal language as the use of non-mathematical, everyday terms or phrases to describe mathematical concepts. For instance, this includes using the phrase “the number next to the letter” to describe ‘coefficient’, or saying “round things” when referring to ‘circles’.

While empirical evidence supporting these views is limited, many feel that it is important for students to learn the formal academic terminology because knowledge of this language may be linked to conceptual understanding (e.g., Raiker, 2002). Zazkis (2000) noticed that her pre-service elementary school teachers tended to use informal expressions when they were unsure of the concept, and only used the formal terms when they were confident in their claims – suggesting that the use of informal expressions can be seen as a sign of incomplete conceptual understanding. Zazkis (2000) concludes that “though there might be exceptions to this rule, good language is usually an indication of good understanding… That is to say that while mathematical ideas and understanding can be expressed informally, I have not found indications of inadequate understanding expressed in a correct formal mathematical language” (p. 42).

Others assert that students should learn to use both formal and informal language to explain their reasoning. As mentioned above, Barwell (2013) found that professional mathematicians effectively integrate both formal and everyday language into mathematical discourse. Boulet (2007) stresses that teachers should not allow students to use “just any kind of talk” (p. 8). She gives the example of not allowing students to continue to call circles ‘round things,’ while allowing them to say ‘take away’ rather than ‘subtract.’ Schleppegrell (2007) stresses that “in contextual and regulative contexts, teachers may foreground the more everyday language, while during procedural and conceptual discussion, more technical language [should be] highlighted” (p. 151). Finally, Leung (2005) supports the development of new mathematics terminology “mediated through informal everyday language… Informal and everyday language can play an important facilitative role” (p. 134).

The studies presented in this paper explore the influence of the use of formal and informal language during self-explanation on students’ performance in mathematics. Self-explanation prompts are questions that ask students to explain to themselves what they have learned (Rittle-Johnson, Loehr & Durkin, 2017), and are often used to encourage students to explain their reasoning precisely. Self-explanation prompts can be used in a variety of ways. For instance, students can be asked to explain their own reasoning to each other (e.g., Webb, Troper & Fall, 1995) or they can be asked to explain the reasoning of others through the use of a worked-example (e.g., Booth, Lange, Koedinger & Newton, 2013).

Many researchers have documented mathematical procedural knowledge gains through the use of self-explanation prompts. We operationally define procedural knowledge as knowing how to carry out a task (Booth, 2011). Studies have especially noted increased procedural transfer, which is the transfer of knowledge to new contexts or problems (e.g., Rittle-Johnson, 2006, explored 8- to 10-year-olds’ mathematical equivalence knowledge; McEldoon, Durkin & Rittle-Johnson, 2013, explored 7- to 9-year-olds’ mathematical equivalence knowledge). Students can develop new procedures with the help of these prompts. For instance, self-explanation prompts can introduce more efficient procedures, such as using mental math and place value rather than the standard algorithm to solve a problem.

Researchers have explored the mediating variables that may influence the effectiveness of self-explanation on learning. One of the most commonly explored mediating variables is the quality of responses to such prompts. Most studies have explored the differences between principle- and rationale-based responses (e.g., Große & Renkl, 2007; Berthold et al., 2009, both of which explored college students’ explanations of probability problems), or between procedural and conceptual responses (e.g., Matthews & Rittle-Johnson, 2009, explored 7- to 10-year-olds’ math equivalence knowledge).

Generally, these studies conclude that more accurate and conceptual responses yield increased learning from the experience. However, to date, no one has explored whether using formal versus informal mathematics language when responding impacts procedural knowledge learning outcomes. It is unclear if students who use formal mathematics language while self-explaining increase their procedural knowledge compared to those who use informal language to describe the same mathematics concepts. If the use of formal language is a sign of conceptual understanding and the use of informal language is a sign of incomplete conceptual understanding (Raiker, 2002; Zazkis, 2000), then we would expect that students who attempt to use formal language more often while self-explaining would have greater procedural knowledge gains than those who attempt to use more informal language, since there is a well-established correlation between conceptual and procedural mathematics knowledge (e.g., Rittle-Johnson & Alibali, 1999).

The main goal is to determine if precise communication requires the use of formal mathematics terminology or if students can effectively describe mathematical concepts using everyday language when self-explaining. The purpose of Study 1 is to explore the effect of the quality of responses to self-explanation prompts on students’ procedural knowledge. We aim to answer the following research question: Does the use of a specific type of language (i.e., formal or informal) improve the effectiveness of self-explanation prompts on improving students’ procedural knowledge?

The purpose of Study 2 is to determine whether there are distinct formal and informal language use growth trajectories and if those unique trajectories predict procedural knowledge gains; we review the literature relevant to this purpose in our introduction of Study 2. The two research questions are: When self-explaining, do distinct mathematics language use growth trajectories exist? If so, is there a significant difference in students’ procedural knowledge gains among these groups?

Participants

Participants included 260 Algebra I students. Data was collected across two years. During year one, 173 students of two teachers (2 classes each) from a rural school district in the Southeastern United States participated. The sample was 50.3% female and 7.5% limited English proficient (LEP). Students were classified as underrepresented minority (URM; Black, Hispanic, biracial) or non-URM (White, Asian), with 31.2% of the students classified as URM. During year two, 87 students of three teachers (2 or 3 classes each) from a suburban school district in the Midwestern United States participated. The sample was 56.3% female, 11.5% LEP, and 26.4% URM. Classes were randomly assigned to one of two conditions – problem-based (n=123) and example-based (n=137). Each condition will be described in the intervention materials section below.

Intervention Procedure

Teachers administered a pre-test before beginning to teach their Graphing Linear Equations unit. Teachers taught the content of the unit using their own typical teaching methods; however, they were asked to sporadically assign four study-worksheets at times they deemed appropriate during the unit. Unit length varied by teacher; however, units typically lasted about four weeks. In order to ensure ecological validity, teachers were given the freedom to assign the worksheets in any order and were told to treat the assignments as they would any other assignment in their class, although they were instructed to have students complete the assignments during the class period, not for homework. Students were allowed to work together if the teacher typically permitted that behavior. Each assignment took about 20 minutes to complete. At the end of the unit, teachers administered the post-test, which was identical to the pre-test. In addition, the school district provided the researchers with de-identified student demographic data, such as gender, URM and LEP status.

Intervention Materials

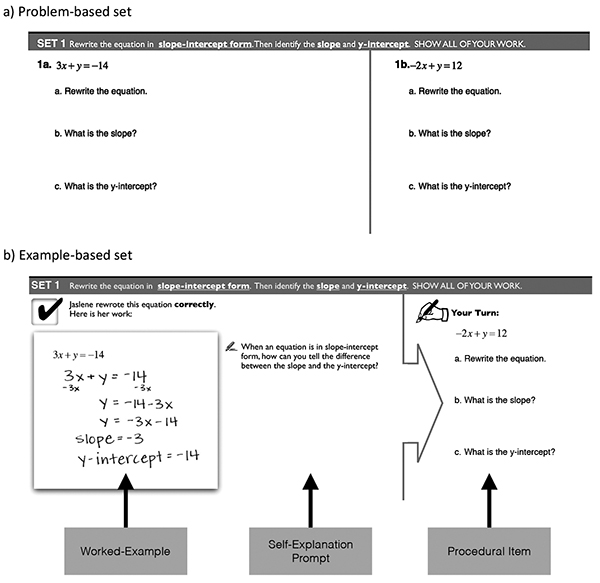

The worksheets of both conditions contained four problem-sets (with two math problems similar to each other per set). Over the course of the unit, all students solved a total of 16 problem-sets. The problem-based worksheets contained four regular problem-sets where students were asked to simply solve each problem, similar to a typical math worksheet. The example-based worksheets replaced one math problem within each set with a worked-example and self-explanation prompt(s). Students in this group were instructed to study the worked-example, answer the self-explanation prompt(s), and complete the second math problem on their own. Each example-based worksheet contained two correct worked-examples and two incorrect worked-examples. See figure 1 for sample problem- and example-based problem sets.

Figure 1. Sample problem- and example-based sets

Measures

Graphing linear equations procedural knowledge. We operationally define procedural knowledge as knowing how to carry out a mathematical task (Booth, 2011). Students were administered a content assessment as a pretest and posttest. This test was created by the researchers using items representative of the types of items found in Algebra I textbooks and taught in Algebra I courses, ensuring face validity. The test comprised 14 procedural items focused on graphing linear equations, which required students to carry out procedures to solve problems; all problems were isomorphic to problems in the worksheets. Cronbach’s alpha indicated that the internal consistency of the measure was sufficient (α = .79).
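For readers who wish to reproduce an internal-consistency check of this kind, the following is a minimal sketch of the standard Cronbach's alpha formula, α = k/(k − 1) · (1 − Σ item variances / variance of total scores). The function name and the toy data are hypothetical illustrations; they are not the authors' code or the study data.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a students-by-items score matrix.

    item_scores: list of rows, one row per student, one score per item.
    """
    n_items = len(item_scores[0])
    if n_items < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item (column) across students.
    item_variances = [
        variance([row[j] for row in item_scores]) for j in range(n_items)
    ]
    # Sample variance of each student's total score.
    total_variance = variance([sum(row) for row in item_scores])
    return n_items / (n_items - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical data: 4 students x 3 items scored 0/1 (not from the study).
toy_scores = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]
alpha = cronbach_alpha(toy_scores)
```

For the 14-item test described here, each row would hold one student's 14 item scores.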

Quality of self-explanation prompt responses. For the purposes of this study, six of the 16 example-based problem-sets were analyzed. These problem-sets were chosen because the self-explanation prompts required the student to describe a mathematical concept using words, rather than give a numerical or symbolic response. One set contained two self-explanation prompts, yielding a total of seven self-explanation prompts.

Two variables were created to represent the quality of students’ responses. The first determined the rate at which students attempted to describe the target concept. In order to answer each self-explanation prompt correctly, the student needed to correctly describe a specific mathematical concept. The student could attempt to describe this concept using either formal (e.g., coefficient) or informal (e.g., next to the letter) language. This variable captured the number of times the student attempted to describe the target concept using either formal or informal language. If the student did not attempt to describe the concept, they often described a different concept or failed to answer the question entirely.

The second variable, the percentage of formal attempts, represents the proportion of all attempts (formal plus informal) in which the student used formal language. For instance, a student with a score of .20 attempted to use formal language 20% of the time and informal language 80% of the time. Using a coding manual created by both authors, all coding was conducted by a single coder, the first author, using the code-recode strategy.

In order to create groups based on language use, we used a median split. Students were grouped by their attempt to describe the target concept (median = 4; range = 0 – 9), as well as by the percentage of formal language attempts (median = .2; range = 0 – 1). Using these two variables, four experimental groups were formed: 1) high attempts/ high formal (HA/HF), 2) high attempts/ low formal (HA/LF), 3) low attempts/ high formal (LA/HF), and 4) low attempts/ low formal (LA/LF). The fifth group was the original problem-based control group. Students in the HA/HF group attempted to describe the target concept about five times. Of these five times, they attempted to use formal language twice (36%) and informal language three times. Students in the HA/LF group attempted to describe the target concept about five times. Of these five times, they attempted to use formal language less than one time (10%) and informal language more than four times. Students in the LA/HF group attempted to describe the target concept about two times. Of these two times, they attempted to use formal language once (50%) and informal language once. Students in the LA/LF group attempted to describe the target concept about two times, using informal language both times.
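The grouping logic can be sketched as follows. This is an illustration under stated assumptions, not the authors' procedure: the text does not say how students scoring exactly at the median were classified, so the sketch assumes "high" means strictly above the median. The formal proportion is taken as formal attempts divided by all attempts, matching the .20 example. All names and data are hypothetical.

```python
from statistics import median


def formal_proportion(formal, informal):
    """Share of concept-description attempts that used formal language."""
    attempts = formal + informal
    return formal / attempts if attempts else 0.0


def median_split_groups(students):
    """Assign each student to HA/HF, HA/LF, LA/HF, or LA/LF.

    students: dict mapping a student id to (formal_attempts, informal_attempts).
    Assumption: 'high' means strictly above the sample median.
    """
    attempts = {s: f + i for s, (f, i) in students.items()}
    formal = {s: formal_proportion(f, i) for s, (f, i) in students.items()}
    attempt_cut = median(attempts.values())
    formal_cut = median(formal.values())
    groups = {}
    for s in students:
        attempt_label = "HA" if attempts[s] > attempt_cut else "LA"
        formal_label = "HF" if formal[s] > formal_cut else "LF"
        groups[s] = f"{attempt_label}/{formal_label}"
    return groups


# Hypothetical students: (formal attempts, informal attempts).
toy = {"s1": (2, 3), "s2": (0, 5), "s3": (1, 1), "s4": (0, 2)}
groups = median_split_groups(toy)
```

Changing the two `>` comparisons to `>=` would place students at the median in the high groups instead.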

A stepwise regression analysis was performed to determine the significance of demographic characteristics on post-test results. Only pre-test scores, URM status, and teacher were significant and therefore retained, F(3, 256) = 57.823, r² = .404, p < .001. LEP, gender, and year of data collection were not significant predictors and were therefore excluded from further analyses. See table 1 for demographic statistics by group.

Table 1. Demographic Statistics by Group

Preliminary analyses were conducted to confirm the validity of the assumptions of ANCOVA. In order to ensure independence of covariates and condition, we conducted a one-way ANOVA between each covariate and condition. Group differences were found for pre-test (F = 3.920, p = .004) and teacher (F = 5.458, p < .001). We then tested a GLM including the covariates (pre-test, URM, and teacher), independent variable (condition), and all interaction terms. No interaction terms were significant; therefore, none were included in the final model.

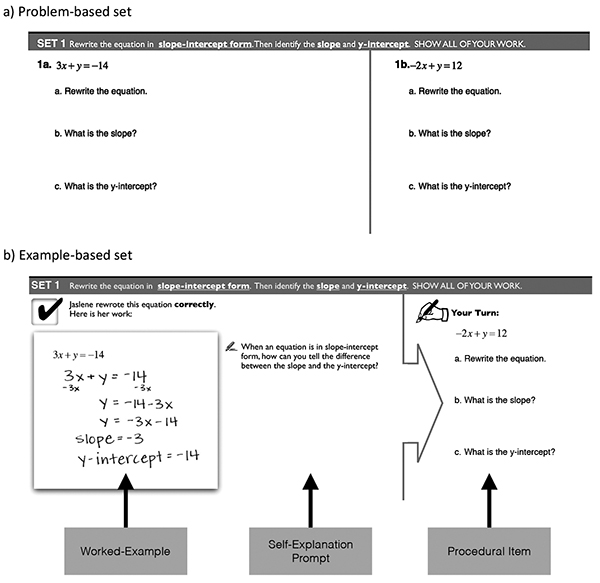

An ANCOVA comparison of post-test scores revealed a significant difference between groups controlling for pre-test, URM status, and teacher, F(4, 252) = 6.575, p < .001, r² = .472, ηp² = .095. Equality of variance was confirmed with Levene’s test (F = 1.394, p = .236). See table 2 for results and descriptive statistics.
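As a consistency check, the reported partial eta squared can be recovered from the reported F statistic and its degrees of freedom using the standard identity below. The subscripts "effect" and "error" are our labels for the two degrees-of-freedom values.

$$
\eta_p^2 = \frac{F \cdot df_{\text{effect}}}{F \cdot df_{\text{effect}} + df_{\text{error}}} = \frac{6.575 \times 4}{6.575 \times 4 + 252} = \frac{26.3}{278.3} \approx .0945
$$

This agrees with the reported value of .095 to rounding.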

Table 2. ANCOVA Results and Descriptive Statistics for Post-Test Procedural Scores by Group

Pairwise comparisons with Bonferroni correction revealed that students who attempted to describe a high number of target concepts (x̅ = 5.18 attempts) using a high percentage of formal language (x̅ = 36.4% formal attempts) (HA/HF) scored significantly higher at the post-test than those who attempted to describe a low number of concepts (x̅ = 2.03) using a low percentage of formal language (x̅ = 0%) (LA/LF), p < .001, and marginally higher than the control group, p = .056. Students who attempted to describe a high number of target concepts (x̅ = 5.03) and used a low percentage of formal language (x̅ = 10.2%) (HA/LF) scored significantly higher than those who attempted to describe a low number of concepts (x̅ = 2.02) using a low percentage of formal language (x̅ = 0%) (LA/LF), p = .005. Finally, the control group scored significantly higher than those who attempted to describe a low number of concepts (x̅ = 2.03) using a low percentage of formal language (x̅ = 0%) (LA/LF), p = .007. See figure 2 for results.

Figure 2. Estimated marginal means of post-test procedural scores by group

The research question for this study was: Does the use of a specific type of language (i.e., formal or informal) improve the effectiveness of self-explanation prompts on improving students’ procedural knowledge? Based on our results, we have determined that it may not. If the type of language used (i.e., formal or informal) when answering self-explanation prompts impacted students’ acquisition of procedural knowledge, then we would have expected to find differences between the high and low formal groups. However, this did not occur; no differences emerged between HA/HF and HA/LF or between LA/HF and LA/LF. In contrast, attempting to describe the target concept (regardless of whether formal or informal terms are used) does impact procedural knowledge gains; this is reflected in the significant differences found between HA/HF and LA/LF and between HA/LF and LA/LF.

We also wished to determine whether any subgroup of students within the experimental condition outperformed the control group. The only subgroup whose procedural post-test scores approached a significant advantage over the control group was the HA/HF group (p = .056). When students attempt to describe most of the target concepts (five of seven), using formal language about 36% of the time and informal language about 64% of the time, studying worked-examples and answering self-explanation prompts may be more beneficial to learning than solving regular math problems alone.

In Study 1, we demonstrate that when students are first introduced to content, the act of attempting to explain concepts is impactful on learning, regardless of whether students use formal or informal language to do so. As described above, students who attempted to explain most of the target concepts (regardless of language used) significantly outperformed those who attempted to explain very few target concepts using no formal language. Study 2 expands on this work by exploring the growth of formal and informal mathematics language use across multiple content units and its impact on the effectiveness of self-explanation prompts to improve procedural knowledge.

Siegler’s (1996) Overlapping Wave Theory (OWT), used to frame this analysis, is based on three assumptions: 1) at any point in time, students use a variety of strategies to solve a specific problem, 2) these various methods constantly compete with each other, and 3) cognitive development involves the gradual change in the rate at which each method is used. The OWT model will help illustrate the development of mathematics language from the use of informal expressions to more formal expressions of mathematical concepts. It is illogical to assume that a student will shift from always using informal language to always using formal language. This “stage-like” model does not accurately represent the gradual nature of development. The OWT model allows for the overlap in types of language use.

When solving a problem, a person must explore the range of possible solution strategies, then choose the most appropriate one depending on the particular goal (Bransford & Stein, 1984). We can relate these problem-solving principles to describe our phenomenon. When it comes to self-explanation, the goal is to correctly explain a concept. There are two possible solution strategies: (1) the student can describe the concept using formal mathematical terminology or (2) the student can use informal, everyday language to describe the concept. The student must choose which strategy to use based on what they feel will be the most effective at that given point in time.

Schleppegrell (2007) states that “learning the mathematics register takes time, and teachers need to set goals that scaffold the development of precise ways of using language over lessons and units of study” (p. 154). A great deal of research has focused on the complexity of vocabulary development. One of the main aspects of this complexity is its incremental development, which is the notion that the development of word knowledge is not an all-or-nothing process – it is acquired at many degrees (Nagy & Scott, 2000). The incremental nature of general vocabulary development can be related to the development of mathematics vocabulary as well. For instance, Lansdell (2010) conducted a qualitative case study with a 5-year-old student, focusing on her developing understanding of the concept change in relation to her use of the word change. Lansdell (2010) concluded that at first the student was able to answer questions about the concept change by using informal language; then she began to use the formal term incorrectly; next she used the word in a general sense (e.g., there will be some change); finally, she began to use the formal word accurately. While this study was conducted on a single 5-year-old student, it demonstrates the development of conceptual understanding of a term in conjunction with the use of informal and formal expressions of that concept. This suggests that allowing for the gradual transition from informal to formal use of terminology is more natural than a sharp transition.

Thus, the purpose of this study was to first determine if there are various formal and informal language use growth trajectories, and then to determine if those trajectories predict procedural knowledge gains. Our research questions are: When self-explaining, do distinct mathematics language use growth trajectories exist? If so, is there a significant difference in students’ procedural knowledge gains among these groups?

Participants

Data from 66 students were analyzed for this study. The original sample from a larger study (published elsewhere) consisted of 258 students from 14 classrooms (12 teachers from five school districts across the United States) who had been randomly assigned to the example-based condition. Due to the nature of the growth curve model (GCM) analysis used in this study and the length of time it takes to code each item, data from a random sample of students were used. It is suggested that the appropriate sample size is greater than or equal to 50 divided by the number of time points (Singer & Willett, 2003). This analysis consisted of four time points; therefore, at least 13 students should be analyzed. However, in order to obtain a more representative sample, approximately 25% of the total available participants (n = 66) were randomly selected (55% female, 18.3% LEP, 60% URM).
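The minimum-sample arithmetic in this paragraph can be written out as follows, taking the rule exactly as it is stated above. Here T denotes the number of time points; the symbol is ours.

$$
n \geq \frac{50}{T} = \frac{50}{4} = 12.5 \quad\Rightarrow\quad n_{\min} = \lceil 12.5 \rceil = 13
$$

The selected sample of 66 students is 66/258 ≈ 25.6% of the available participants, well above this minimum.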

Procedure

As mentioned above, this analysis stems from a larger yearlong study. Similar to Study 1, the purpose of the larger study was to determine the effect of worked-examples and self-explanation prompts on algebra learning; however, the intervention in this study lasted for an entire school year, rather than a single unit.

Assessment procedure. At the beginning of the school year, all students were given a pre-test assessing their pre-algebra knowledge. Throughout the school year, students were given four quarterly exams. The four exams contained the same 18 items; however, teachers were asked to assign only the test items covering content taught to date, so students did not answer items on content they had not yet been taught. These exams assessed both procedural and conceptual algebra knowledge. At the conclusion of the year, students were given a post-test consisting of ten Algebra I standardized-test release items.

Intervention procedure. Throughout the school year, similar to Study 1, teachers taught the algebra content using their own typical teaching methods; they were also asked to sporadically assign the study-worksheets. Teachers gave their students up to 42 study-worksheets at times they deemed appropriate during the year. The teachers did not have to assign a worksheet if they did not cover that material in their curriculum, but they were asked to assign about 80% or more of the available worksheets. The worksheets followed the same structure as those in Study 1. However, rather than only covering the Graphing Linear Equations unit, these 42 worksheets covered the entire Algebra I curriculum.

Measures

Algebra procedural knowledge. The quarterly benchmark exams consisted of 18 items, each of which had multiple parts, yielding a total of 71 sub-items. Of these 71 sub-items, 25 measured students’ procedural knowledge of algebra content. All items were created by the researchers and were isomorphic to problems in the worksheets, which were representative of the types of problems found in Algebra I textbooks and taught in Algebra I courses, supporting face validity. Algebra procedural knowledge scores were calculated for each quarter by dividing the number of correctly answered items by the total number of assigned items. Cronbach’s alpha indicated that the internal consistency of the measure was sufficient (α = .95). Quarterly procedural scores were correlated with post-test scores, r = .74, p < .001, showing good predictive validity.
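The two calculations described above, the quarterly procedural score and Cronbach’s alpha, can be sketched as follows. This is a minimal illustration using the standard alpha formula and made-up item scores; it is not the authors’ scoring script, and the function names and toy data are assumptions. The alpha function expects a complete students-by-items matrix, so it does not address how unassigned items were handled in the study.

```python
from statistics import variance


def procedural_score(n_correct: int, n_assigned: int) -> float:
    """Proportion of assigned procedural items answered correctly."""
    return n_correct / n_assigned


def cronbach_alpha(rows):
    """Cronbach's alpha for a complete matrix of item scores.

    rows: one list per student, each holding that student's score on
    every item (all lists the same length, at least two items).
    """
    k = len(rows[0])
    # Variance of each item across students.
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    # Variance of each student's total score.
    total_var = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical data: four students, three items scored 0/1.
toy_scores = [
    [1, 1, 1],
    [1, 1, 0],
    [0, 0, 0],
    [1, 0, 0],
]

print(procedural_score(20, 25))   # e.g. 20 of 25 assigned items correct
print(round(cronbach_alpha(toy_scores), 3))
```

Dividing by the number of assigned items, rather than by the full 25, keeps scores comparable across classrooms that had covered different amounts of content by a given quarter.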

Quality of self-explanation prompt responses. Each worksheet had between three and eight self-explanation prompts, yielding a total of 288 prompts. Similar to Study 1, items were not analyzed if they did not require the student to self-explain a concept in words. Eighty-two of the 288 self-explanation prompts were eligible for analysis. Again, due to the nature of GCM analysis and the time required for coding, a random selection of 40 items (ten items per quarter) was analyzed.
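Selecting ten prompts per quarter from the eligible pool amounts to stratified random sampling. The sketch below shows one way to do this; the split of the 82 eligible prompts across quarters, the prompt IDs, and the seed are all hypothetical, since the source reports only the totals.

```python
import random


def sample_prompts_by_quarter(eligible, per_quarter=10, seed=0):
    """Draw the same number of prompts at random from each quarter.

    eligible: dict mapping quarter number -> list of eligible prompt IDs.
    """
    rng = random.Random(seed)
    return {
        quarter: sorted(rng.sample(ids, per_quarter))
        for quarter, ids in eligible.items()
    }


# Hypothetical allocation of the 82 eligible prompts to quarters.
counts = {1: 21, 2: 20, 3: 21, 4: 20}
eligible = {
    q: [f"Q{q}-P{i:02d}" for i in range(1, n + 1)] for q, n in counts.items()
}

selected = sample_prompts_by_quarter(eligible)
print(sum(len(ids) for ids in selected.values()))  # 40 prompts in total
```

Sampling within each quarter guarantees that every time point in the growth model is represented by the same number of coded prompts.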

Similar to Study 1, in order to answer each self-explanation prompt correctly, the student needed to correctly describe a specific mathematical concept. The student could attempt to describe this concept using either formal or informal language. As in Study 1, each student response was coded for whether the student attempted to use the formal or the informal version of the key concept. Using a coding manual created by both authors, all coding was conducted by a single coder, the first author, using the code-recode method.

Growth Trajectories

The first research question is: When self-explaining, do distinct mathematics language use growth trajectories exist? For this analysis, growth mixture modeling (GMM) was conducted using Mplus 7.2 software. For each mathematics language measure (formal and informal attempts), we ran a single-class model in order to determine the most appropriate change function (linear, quadratic or cubic). All factors were fixed at zero. Full information maximum likelihood (FIML) was used as the estimation method in order to handle missing data. Next, we determined if distinct growth trajectories existed within the best-fit change function model.

Formal Attempts. Based on the goodness-of-fit parameters, a quadratic model was used to determine the number of latent classes. See table 3 for fit statistics of the formal attempt single-class models. Based on the criteria for goodness-of-fit, a two-class model is the best fit for this set of data. See table 4 for latent class growth model (LCGM) results. The best log likelihood value was replicated, indicating successful convergence. A random-intercept two-class model was also tested (BIC = -75.626; AIC = -101.902); however, a fixed-intercept model was a better fit.

Table 3. Fit Statistics for Single-Class Formal Attempt Models (n=66)

Table 4. Formal Attempt Quadratic LCGMs

Based on the two-class quadratic model, about two-thirds of the sample attempted to use formal expressions 40% of the time throughout the entire school year. The remaining one-third of the sample started the year attempting to use formal expressions about 25% of the time, dipped in attempts during the second and third quarters, and ended the year again at about 25%. See table 5 for model estimates.

Table 5. Formal Attempt Two-Class Quadratic Model

Informal Attempts. Based on the goodness-of-fit parameters, a cubic model was used to determine the number of latent classes. See table 6 for fit statistics of the informal attempt single-class models. Based on the criteria for goodness-of-fit, a three-class cubic model is the best fit for this set of data. See table 7 for LCGM results. The best log likelihood value was replicated. A random-intercept three-class model was also tested (BIC = -50.315; AIC = -91.919); however, a fixed-intercept model was a better fit.
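As a reading aid for the AIC and BIC values reported for the two random-intercept models, the sketch below backs out the number of free parameters and the log likelihood they imply. It assumes the standard definitions AIC = 2k − 2 ln L and BIC = k ln(n) − 2 ln L with n = 66; the source does not state which formulas or sample size the software used, so the implied quantities are inferences rather than reported results, and the function names are illustrative.

```python
import math


def implied_parameter_count(aic: float, bic: float, n: int) -> float:
    """Solve BIC - AIC = k * (ln(n) - 2) for k, the number of free parameters."""
    return (bic - aic) / (math.log(n) - 2)


def implied_log_likelihood(aic: float, k: float) -> float:
    """Solve AIC = 2k - 2 ln L for ln L."""
    return (2 * k - aic) / 2


N_STUDENTS = 66

# Values reported in the text for the random-intercept models.
reported = {
    "formal, two-class": {"aic": -101.902, "bic": -75.626},
    "informal, three-class": {"aic": -91.919, "bic": -50.315},
}

for label, fit in reported.items():
    k = implied_parameter_count(fit["aic"], fit["bic"], N_STUDENTS)
    log_lik = implied_log_likelihood(fit["aic"], round(k))
    print(f"{label}: k = {k:.3f}, ln L = {log_lik:.3f}")
```

Under these assumptions the implied parameter counts come out very close to whole numbers (about 12 and 19), which suggests the reported AIC and BIC values are internally consistent with a sample of 66. Lower values of either index indicate better fit after penalizing model complexity, with BIC penalizing extra parameters more heavily at this sample size.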

Table 6. Fit Statistics for Single-Class Informal Attempt Models (n=66)

Table 7. Informal Attempt Cubic LCGMs

Based on the three-class cubic model, it seems that the majority of the sample attempted to use informal expressions consistently throughout the school year; one group attempted them about 25% of the time, while the other group attempted to use them about 45% of the time. A small portion of the sample showed a spike in informal attempts during the second quarter. See table 8 for model estimates.

Table 8. Informal Attempt Three-Class Cubic Model

Formal and informal attempt groups. In order to determine the relation between the use of formal and informal expressions, groups of students were created for each formal/informal growth trajectory combination. For instance, students from formal attempt class 1 and informal attempt class 1 became one group, while students from formal attempt class 1 and informal attempt class 2 became a second. Six new groups were created. See table 9 for a breakdown of the new Formal/Informal Attempt groups. Note that the mean mathematics language attempt scores used are based on these new subgroups, rather than original LCGM estimates.
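Crossing the two formal attempt classes with the three informal attempt classes yields the six groups described above. A minimal sketch of that cross-classification follows; the group numbers produced here simply follow enumeration order and are an assumption, since the numbering used in table 9 cannot be confirmed from the text.

```python
from itertools import product

FORMAL_CLASSES = [1, 2]        # two-class quadratic model
INFORMAL_CLASSES = [1, 2, 3]   # three-class cubic model

# Map each (formal class, informal class) pair to a group label.
# The labels are hypothetical: they follow enumeration order only.
GROUP_OF = {
    combo: number
    for number, combo in enumerate(
        product(FORMAL_CLASSES, INFORMAL_CLASSES), start=1
    )
}


def assign_group(formal_class: int, informal_class: int) -> int:
    """Return the combined group for one student's pair of class memberships."""
    return GROUP_OF[(formal_class, informal_class)]


print(len(GROUP_OF))        # 2 x 3 = 6 combined groups
print(assign_group(1, 2))   # formal class 1 with informal class 2
```

Because group means are then recomputed within each combination, they can differ from the class-level estimates of the original models, which is why the text notes that the scores are based on the new subgroups.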

Table 9. Formal/Informal Attempt Groups

The second research question is: Is there a significant difference in students’ procedural knowledge gains among these groups? As seen above, six groups of students exist. To assess whether these six groups differed significantly from one another with regard to procedural knowledge gains, we ran a repeated-measures ANCOVA using group assignment as the grouping variable, teacher as the covariate, and procedural knowledge scores at each quarter (Quarters 1 through 4) as the dependent variable. Group 3, which was made up of only one student, was eliminated in order to run post hoc analyses.

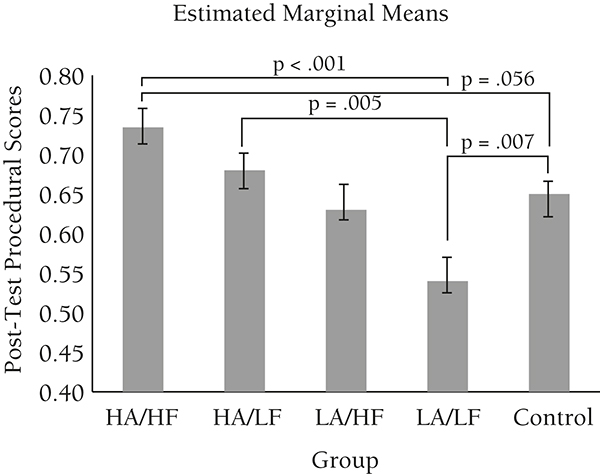

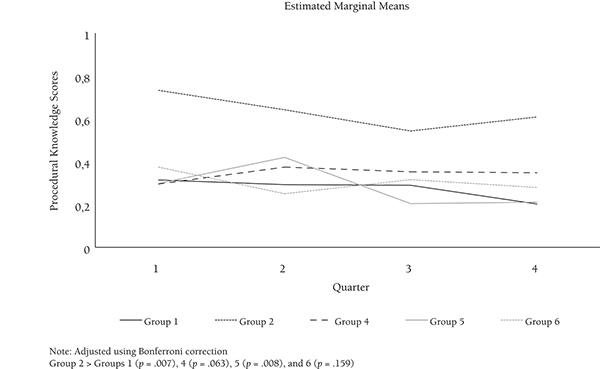

Tests of between-subjects effects found significant differences by group (F(4, 35) = 5.304, p = .002, partial η² = .377) and by teacher (F(1, 35) = 4.169, p = .049, partial η² = .106). Using Bonferroni correction, post hoc tests revealed that Group 2 outperformed the other groups. Group 2 is made up of those students who consistently attempted to use both formal and informal expressions throughout the entire school year, with no major dips or increases in attempts during any quarter. Students in this group attempted to use formal expressions slightly more often than informal expressions. See figure 3 for post hoc results.
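The reported effect sizes can be recovered from the F statistics and their degrees of freedom using the standard identity partial η² = (F × df_effect) / (F × df_effect + df_error). The sketch below applies that identity to the two reported tests; the function name is illustrative.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared computed from an F statistic and its degrees of freedom."""
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# Group effect: F(4, 35) = 5.304
print(round(partial_eta_squared(5.304, 4, 35), 3))  # 0.377

# Teacher covariate: F(1, 35) = 4.169
print(round(partial_eta_squared(4.169, 1, 35), 3))  # 0.106
```

Both values match those reported in the text, so the F statistics, degrees of freedom, and effect sizes are mutually consistent.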

Figure 3. Procedural knowledge growth by formal/informal attempt groups

The purpose of this paper was to explore the use of formal and informal mathematical language when self-explaining and its effect on mathematics procedural knowledge. The importance of precise mathematical communication is widely accepted among teachers, researchers, and policy makers. Often, the use of formal mathematical terminology is considered precise and a sign of sufficient conceptual understanding, while the use of everyday language to reference mathematical concepts is less often seen as precise and may be a sign of incomplete understanding (Raiker, 2002; Zazkis, 2000). Since conceptual understanding is linked to procedural knowledge (Rittle-Johnson & Alibali, 1999), it would be expected that those who use formal language more often would have higher procedural knowledge scores than those who use informal language more often; however, this expectation was not supported. When students explain their ideas to peers, they learn more (Webb et al., 1995; Webb, 2009). Based on our results, it seems that students may benefit regardless of the type of language used.

Study 1

We explored students’ use of language when answering self-explanation prompts. Two measures were used. The first calculated the number of times students attempted to use either formal or informal language to describe the target concept. The second calculated the percentage of times formal language was used when attempting to describe the target concept, capturing the difference between formal and informal language attempts. If the type of language used (formal or informal) when answering self-explanation prompts affected students’ acquisition of procedural knowledge, then we would have expected to find differences between the high and low formal groups. However, this did not occur; no differences emerged between HA/HF and HA/LF or between LA/HF and LA/LF. Attempting to describe the target concept (regardless of whether it is with formal or informal terms), by contrast, does affect procedural knowledge gains; this is reflected in the significant differences found between HA/HF and LA/LF and between HA/LF and LA/LF. This finding is consistent with prior work showing that having students go through the process of self-explaining, independent of the quality of those explanations, yields benefits to learning (Chi, 2000).
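The two measures described above can be illustrated with a short sketch. It assumes each response has already been coded with a single label ("formal", "informal", or "none" for no attempt at the target concept); the labels, the function name, and the example responses are hypothetical and are not taken from the coding manual.

```python
def language_measures(codes):
    """Return (number of attempts, percent of attempts that were formal).

    codes: one label per prompt, each "formal", "informal", or "none".
    The percentage is None when the student made no attempts at all.
    """
    attempts = [code for code in codes if code in ("formal", "informal")]
    n_attempts = len(attempts)
    if n_attempts == 0:
        return 0, None
    pct_formal = 100 * attempts.count("formal") / n_attempts
    return n_attempts, pct_formal


# Hypothetical student: seven prompts, five attempts, two of them formal.
student_codes = [
    "formal", "informal", "informal", "none",
    "formal", "informal", "none",
]

print(language_measures(student_codes))  # (5, 40.0)
```

Keeping the two measures separate is what allows the attempt groups (high versus low attempts) to be compared independently of the language groups (high versus low formal).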

We also wished to determine whether any subgroup of students within the experimental condition outperformed the control group. The only subgroup to score significantly higher on procedural post-test scores compared to the control group was the HA/HF group. When students attempt to describe most of the target concepts (five of seven), using formal language 30% of the time and informal language 70% of the time, studying worked-examples and answering self-explanation prompts is more beneficial to learning than solving regular math problems alone.

LEP status was not included in the models because GLM results were not significant, owing to the small sample of LEP students; nevertheless, descriptive statistics reveal interesting trends (described in table 1). Of the LEP students in the experimental condition, all used a low percentage of formal language. This trend seems to be supported by the research findings of Planas (2014), who suggests that LEP students struggle with the use of mathematics vocabulary.

Study 2

The purpose of this study was to determine whether there are distinct language use growth trajectories and whether those trajectories predict procedural knowledge gains. When exploring students’ attempts to explain a concept either formally or informally, six distinct groups emerged. As seen in table 9, the majority of students were from Group 1, 4, or 5; however, Group 2 outperformed all other groups on the mathematics procedural knowledge measure (see figure 3). It seems that using a consistent percentage of formal and informal expressions throughout the school year (using formal expressions slightly more often than informal) is associated with better procedural outcomes. Zazkis (2000) agrees that it may be “possible for a child to understand the idea of [a concept] without the ability to express this idea in an appropriately mathematical fashion” (p. 41). This would explain why both types of expressions predict learning. It seems that as long as students attempt to explain the target concept, they gain procedural knowledge through the use of self-explanation.

Limitations

It is unknown whether formal language might be more critical for other math content or at other points in the learning process. The Algebra I students in these studies were being exposed to the material for the first time; thus, their use of language was likely influenced by the novelty of the terms. As students become more proficient with the material, they may be more likely to attempt to use related vocabulary, their ability to use the related terminology correctly may increase, and their ability to connect this knowledge to solve relevant problems may improve.

Since the purpose of the original study was not to explore vocabulary use, a strong emphasis was not put on the completion of self-explanation prompts. Students were not given any incentive to put effort into answering the prompts to the best of their ability, resulting in a large number of unanswered or partially answered prompts. In the future, researchers should design a study specifically to explore students’ language use, with a larger sample size and multiple coders; the small sample and the single coder are both limitations of the studies presented in this paper.

Furthermore, teachers were given the opportunity to choose which worksheets to assign to their students and were able to assign them in any order. While this methodology was used due to participating teachers coming from different school districts with different content schedules, this made it difficult to compare across mathematical concepts. In order to gain a better understanding of students’ language use by concept, in the future, all students should be asked to explain the same set of concepts in the same order throughout the school year.

Finally, it is important to note that while we focus on the utility of mathematical language and its relation to procedural knowledge, there are many other reasons for acquiring an understanding of the formal mathematics terminology used in the classroom. For example, the use of mathematical language influences the power positions within the classroom (McBride, 1989), comprehension of assessment questions (DiGisi & Fleming, 2005) and interpretation of texts (Zevenbergen, 2001). In the present studies, we did not test for impacts on any of these other factors.

Final Thoughts

It is well established that conceptual understanding is linked to procedural knowledge (Rittle-Johnson & Alibali, 1999). If the use of precise language is a sign of sufficient conceptual understanding, we expect that precise mathematics language use will predict procedural knowledge. While students are expected to explain their reasoning precisely, there is no consensus on what precise mathematics language sounds like. Can students explain mathematical ideas precisely using both everyday language and formal mathematics language? Based on this analysis, it seems that at the Algebra I level, the key to procedural knowledge gains through explaining worked-examples may be simply attempting to explain the correct concept, regardless of the type of language used. Study 1 reveals that students who attempt to explain most of the target concepts (both low and high formal attempt groups) score significantly higher than those who attempt a very low number of target concepts using only informal language. Study 2 reveals that those who consistently attempt to use both formal and informal language throughout the school year score significantly higher throughout the school year on procedural knowledge measures.

These findings are consistent with prior work showing that having students go through the process of self-explaining, independent of the quality of those explanations, yields benefits to learning (Chi, 2000). Though teachers should still use formal language in their classrooms, they should not be discouraged if students initially do not attempt to use formal language consistently. We suggest that teachers allow students to explain their reasoning using either formal or informal terms, especially while students are in the midst of developing an understanding of the mathematical concepts.

Barwell, R. A. (2005). Ambiguity in the mathematics classroom. Language and Education, 19(2), 117-125.

Barwell, R. A. (2013). The academic and the everyday in mathematicians’ talk: The case of the hyper-bagel. Language and Education, 27(3), 207-222.

Barwell, R. A. (2016). Formal and informal mathematical discourses: Bakhtin and Vygotsky, dialogue and dialectic. Educational Studies in Mathematics, 92(3), 331-345.

Berthold, K., Eysink, T. H. S. & Renkl, A. (2009). Assisting self-explanation prompts are more effective than open prompts when learning with multiple representations. Instructional Science, 37, 345-363.

Booth, J. L. (2011). Why can’t students get the concept of math? Perspectives on Language and Literacy, Spring 2011, 31-35.

Booth, J. L., Lange, K. E., Koedinger, K. R. & Newton, K. J. (2013). Using example problems to improve student learning in algebra: Differentiating between correct and incorrect examples. Learning and Instruction, 25, 24-34.

Boulet, G. (2007). How does language impact the learning of mathematics? Let me count the ways. Journal of Teaching and Learning, 5(1), 1-12.

Bransford, J. D. & Stein, B. S. (1984). The ideal problem solver: A guide for improving thinking, learning and creativity. A Series of Books in Psychology. New York: Freeman.

Chi, M. T. (2000). Self-explaining expository texts: The dual processes of generating inferences and repairing mental models. Advances in Instructional Psychology, 5, 161-238.

Common Core State Standards Initiative (2010). Common Core State Standards for Mathematics. Washington, DC: National Governors Association Center for Best Practices and the Council of Chief State School Officers.

Department for Education and Employment (DfEE) (1999). The National Numeracy Strategy: Framework for Teaching Mathematics from Reception to Year 6. London: DfEE.

DiGisi, L. L. & Fleming, D. (2005). Literacy specialists in math class! Closing the achievement gap on state math assessments. Voices from the Middle, 13(1), 48-52.

Große, C. S. & Renkl, A. (2007). Finding and fixing errors in worked examples: Can this foster learning outcomes? Learning & Instruction, 17, 612-634.

Lansdell, J. M. (2010). Introducing young children to mathematical concepts: Problems with ‘new’ terminology. Educational Studies, 25(3), 327-333.

Leung, C. (2005). Mathematical vocabulary: Fixers of knowledge or points of exploration? Language and Education, 19(2), 127-135.

Matthews, P. & Rittle-Johnson, B. (2009). In pursuit of knowledge: Comparing self-explanations, concepts, and procedures as pedagogical tools. Journal of Experimental Child Psychology, 104(1), 1-21.

McBride, M. (1989). A foundational analysis of mathematical discourse. For the Learning of Mathematics, 40-46.

McEldoon, K. L., Durkin, K. L. & Rittle-Johnson, B. (2013). Is self-explanation worth the time? A comparison to additional practice. British Journal of Educational Psychology, 83(4), 615-632.

Moschkovich, J. N. (2002). An introduction to examining everyday and academic mathematical practices. Journal for Research in Mathematics Education Monograph Series, 1-11.

Nagy, W. E. & Scott, J. A. (2000). Vocabulary processes. In M. L. Kamil, P. B. Mosenthal, P. D. Pearson & R. Barr (eds.). Handbook of reading research, Volume 3 (pp. 269-284). Mahwah, New Jersey: Lawrence Erlbaum Associates.

Ontario Ministry of Education (OME) (2005). Mathematics curriculum grades 1-8. Ottawa, ON: Queen’s Printer for Ontario.

Planas, N. (2014). One speaker, two languages: Learning opportunities in the mathematics classroom. Educational Studies in Mathematics, 87(1), 51-66.

Raiker, A. (2002). Spoken language and mathematics. Cambridge Journal of Education, 32(1), 45-60.

Rittle-Johnson, B. (2006). Promoting transfer: Effects of self-explanation and direct instruction. Child Development, 77(1), 1-15.

Rittle-Johnson, B. & Alibali, M. W. (1999). Conceptual and procedural knowledge of mathematics: Does one lead to the other? Journal of Educational Psychology, 91(1), 175.

Rittle-Johnson, B., Loehr, A. M. & Durkin, K. (2017). Promoting self-explanation to improve mathematics learning: A meta-analysis and instructional design principles. ZDM, 49(4), 599-611.

Schleppegrell, M. J. (2007). The linguistic challenges of mathematics teaching and learning: A research review. Reading & Writing Quarterly, 23(2), 139-159.

Siegler, R. S. (1996). Emerging minds: The process of change in children’s thinking. New York, NY: Oxford University Press.

Singer, J. D. & Willett, J. B. (2003). Applied longitudinal data analysis: Modeling change and event occurrence. NY: Oxford University Press.

Webb, N. M. (2009). The teacher’s role in promoting collaborative dialogue in the classroom. British Journal of Educational Psychology, 79, 1-28.

Webb, N. M., Troper, J. D. & Fall, R. (1995). Constructive activity and learning in collaborative small groups. Journal of Educational Psychology, 87, 406-423.

Zazkis, R. (2000). Using code-switching as a tool for language mathematical language. For the Learning of Mathematics, 20(3), 38-43.

Zevenbergen, R. (2001). Mathematical literacy in the middle years. Literacy Learning: The Middle Years, 9(2), 21-28.

Precise mathematics communication: The use of formal and informal language

INTRODUCTION. When explaining their reasoning, students should communicate their mathematical thinking precisely; however, it is unclear whether formal terminology is necessary or whether students can explain themselves using everyday language to describe mathematical concepts. This paper reports the results of two studies. The first explores the relation between students’ use of formal and informal mathematical language and their knowledge of mathematical procedures; the second replicates and extends these findings using a longitudinal design over the course of an entire school year. METHOD. Study 1 uses a pre-test, intervention, post-test method across one unit of study. Study 2 uses the same pre-test, intervention, post-test method, but implements a longitudinal design over an entire school year using growth curve analysis. RESULTS. The results show that students benefit most when they attempt to describe the target mathematical concepts, regardless of the type of language used. This finding is consistent with prior work showing that having students go through the process of self-explanation, regardless of the quality of those explanations, yields benefits for learning (Chi, 2000). DISCUSSION. Although teachers should still use formal language in their classes, they should not be discouraged if students are initially unable to use formal language correctly. We suggest that teachers allow students to explain their reasoning using formal or informal terms, especially while students are in the midst of developing an understanding of the mathematical concepts.

Keywords: Mathematics instruction, Communication, Language, Algebra.

Precise mathematical communication: The use of formal and informal language

INTRODUCTION. When explaining their reasoning, students must communicate their mathematical thinking precisely. However, it is unclear whether formal terminology is necessary or whether students can explain themselves using everyday language to describe mathematical concepts. This article presents the results of two studies. The first study analyzed here explores the relation between students’ use of formal and informal mathematical language and procedural knowledge in mathematics. The second study analyzed here replicates and extends these results using a longitudinal design over the course of an entire school year. METHODS. Study 1 uses a pre-test, intervention, post-test method across one unit of study. Study 2, using the same pre-test, intervention, post-test method, nevertheless implements a longitudinal design over an entire school year using growth curve analysis. RESULTS. The results show that students benefit most when they attempt to describe the target mathematical concepts, regardless of the type of language used. This finding is consistent with prior work showing that having students go through the process of self-explanation, independent of the quality of those explanations, has benefits for learning (e.g., Chi, 2000). DISCUSSION. Although teachers should still use formal language in their classrooms, they should not be discouraged if students are initially unable to use formal language correctly. We suggest that teachers allow students to explain their reasoning using either formal or informal terms, particularly while students are in the process of developing an understanding of the mathematical concepts.

Keywords: Mathematics instruction, Communication, Language, Algebra.

Kelly M. McGinn (corresponding author)

Assistant Professor of Educational Psychology at Temple University. She holds a BA in Psychology from the Catholic University of America, a certification in both secondary general science and mathematics from the University of Pennsylvania, and a PhD in Educational Psychology from Temple University. Her research focuses on the development of mathematics conceptual understanding, and she is especially interested in the relation between the use of mathematical language and conceptual development.

Correspondence address: Temple University. 1801 N Broad St, Philadelphia, PA 19122 USA

E-mail: kelly.mcginn@temple.edu

Julie L. Booth

Associate Professor of Educational Psychology at Temple University, and the Associate Dean of Undergraduate Education for the College of Education. Her research interests lie in translating between cognitive science/cognitive development and education by finding ways to bring laboratory tested cognitive principles to real-world classrooms, identifying prerequisite skills and knowledge necessary for learning, and examining individual differences in the effectiveness of instructional techniques based on learner characteristics.